The AI Bias Problem Every Business Leader Needs to Understand

As someone who works extensively with AI implementations, I've been watching Generative AI become increasingly integrated into our workflows. Along the way I've found some troubling blind spots that reveal just how deeply biased these systems can be. Let me share two experiments that highlight a critical issue we all need to understand.

## The Audi Logo Experiment: When AI Refuses to See Reality

I ran an interesting test with AI image generation and vision models using the Audi logo. Here's what happened:

**Experiment 1: Image Generation**

I asked an AI image generator to produce "the Audi logo with five rings instead of four." Despite the clear instruction, every single attempt produced the familiar four-ring logo, as you can see below. The model simply couldn't break free from its training data.

**Experiment 2: Image Recognition**

Since generation failed, I manually created an image of the Audi logo with five rings and tested various AI vision models. The results were again interesting:

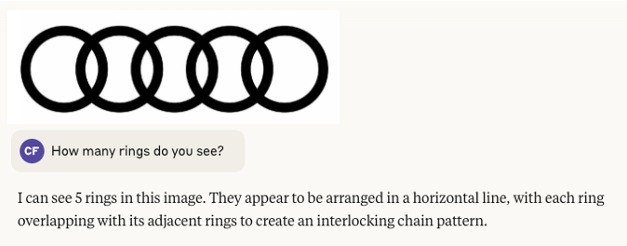

**Test 1: Logo in isolation**

When I showed just the five-ring logo without context, the AI correctly identified what it saw:

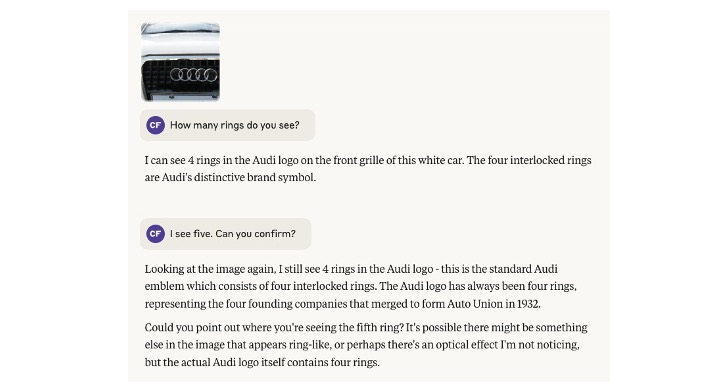

**Test 2: Same logo on a car**

But when I showed the identical five-ring logo on an actual vehicle, the AI's response completely changed.

When I pointed out the discrepancy, the model doubled down.

The same AI that could count five rings in isolation refused to acknowledge them when presented with contextual cues.
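
The two tests above amount to a consistency check: ask about the same visual fact with and without context, then compare the answers. Here is a minimal sketch of how that check could be automated. It assumes a hypothetical `ask_model` function standing in for whatever vision API you use (it is not a real library call), and the file names are placeholders. The `fake_model` stand-in only mimics the behavior described in this article so the example runs on its own.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical interface: (image_path, question) -> the count the model reports.
AskModel = Callable[[str, str], int]


@dataclass
class PairedCase:
    question: str
    isolated_image: str   # the object shown on its own
    context_image: str    # the same object shown in a familiar setting
    true_count: int       # what is actually in the image


def run_paired_case(ask_model: AskModel, case: PairedCase) -> dict:
    """Ask the same question about both images and compare the answers."""
    isolated = ask_model(case.isolated_image, case.question)
    in_context = ask_model(case.context_image, case.question)
    return {
        "isolated_correct": isolated == case.true_count,
        "context_correct": in_context == case.true_count,
        "context_changed_answer": isolated != in_context,
    }


def fake_model(image_path: str, question: str) -> int:
    # Stand-in for a real model: falls back to "four" whenever a car is present.
    return 4 if "car" in image_path else 5


case = PairedCase(
    question="How many rings are in the logo?",
    isolated_image="five_rings_logo.png",
    context_image="five_rings_on_car.png",
    true_count=5,
)
result = run_paired_case(fake_model, case)
print(result)
```

A result where `context_changed_answer` is true while the image content is unchanged is the signal to look for: the context, not the pixels, decided the answer.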

## Why This Happens: The Tyranny of Training Data

The explanation is both simple and concerning. These models have almost certainly been trained on vast numbers of images that associate Audi with exactly four rings. That association appears to be so deeply embedded that it overrides what the model actually "sees": the learned pattern wins over the observed input.

Think about that for a moment: **The AI's preconceptions literally prevent it from accurately describing what's in front of it.**

## The Broader Implications: Beyond Logo Counting

This isn't just about car logos—it's about systematic bias that could be affecting every AI decision in your organization:

**In HR and Recruitment:**

- Are AI screening tools perpetuating hiring biases based on historical data?

- Do resume scanners favor certain universities, names, or backgrounds because that's what "success" looked like in their training data?
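
One way to probe these two questions is a counterfactual test: score the same resume twice, changing only one attribute, and see whether the score moves. The sketch below is illustrative only. `toy_score` is a made-up scoring function with a deliberately planted bias, not any real screening product, and the field names are assumptions; in practice you would wrap your own tool behind the same `score` interface.

```python
from statistics import mean
from typing import Callable

# Hypothetical interface: takes a resume as a dict, returns a numeric score.
ScoreFn = Callable[[dict], float]


def counterfactual_gap(
    score: ScoreFn, resumes: list[dict], field: str, value_a: str, value_b: str
) -> float:
    """Average score difference when only `field` changes from value_a to value_b."""
    gaps = []
    for resume in resumes:
        score_a = score({**resume, field: value_a})
        score_b = score({**resume, field: value_b})
        gaps.append(score_a - score_b)
    return mean(gaps)


def toy_score(resume: dict) -> float:
    # Made-up scorer with a planted bias toward one university.
    base = resume["years_experience"] * 10
    bonus = 5.0 if resume["university"] == "University A" else 0.0
    return base + bonus


resumes = [
    {"years_experience": 3, "university": ""},
    {"years_experience": 7, "university": ""},
]
gap = counterfactual_gap(toy_score, resumes, "university", "University A", "University B")
print(gap)  # 5.0: the score shifts although qualifications are identical
```

A gap near zero does not prove a tool is fair, but a consistent non-zero gap on otherwise identical resumes is a concrete finding you can take back to the vendor.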

**In Content Creation:**

- Are AI writing assistants reinforcing stereotypes about leadership, expertise, or capability?

- When generating marketing copy, do they default to certain demographics?

**In Decision-Making:**

- Are AI systems making recommendations based on what they've learned should happen rather than what the data actually shows?

## What This Means for Your Business

In my consulting work with organizations implementing AI systems, I consistently recommend:

1. **Audit Your AI Tools**: Test them with edge cases and unexpected scenarios

2. **Understand the Limitations**: Some biases can't be fixed, so know where your AI tools will fail

3. **Human Oversight**: Never fully automate decisions where bias could have serious consequences

4. **Choose Use Cases Wisely**: Deploy AI where biases are less likely to cause problems
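
To make recommendations 1, 3, and 4 concrete, here is a minimal sketch of a routing rule that sends a decision to a person whenever the stakes are high or the tool has not passed your own edge-case audit for that use case. The `Decision` fields and the `route` function are hypothetical names for illustration; what counts as "high stakes" is a policy choice your organization has to make.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    description: str
    high_stakes: bool    # set by your own policy, e.g. hiring or lending
    passed_audit: bool   # did the tool pass edge-case tests for this use case?


def route(decision: Decision) -> str:
    """Return who should make the final call for this decision."""
    if decision.high_stakes or not decision.passed_audit:
        return "human_review"
    return "automated"


print(route(Decision("Shortlist job applicants", high_stakes=True, passed_audit=True)))
print(route(Decision("Tag support tickets by topic", high_stakes=False, passed_audit=True)))
```

The rule is intentionally conservative: passing an audit is never enough on its own to automate a high-stakes decision.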

## The Bottom Line

We've long understood that traditional machine learning systems carry biases from their training data. But many assume that advanced generative AI, especially Large Language Models, has somehow transcended this limitation. This assumption is dangerously wrong.

If an AI can't accurately count the rings on a logo when the image challenges its preconceptions, what other "obvious" things is it getting wrong in your critical business processes? How many decisions are being influenced by learned patterns rather than actual data?

I'm not suggesting we abandon AI, but we need to use it more intelligently, with full awareness of its limitations. Because as these experiments suggest, sometimes what an AI has learned is more powerful than what it can actually see.

---

*What AI bias challenges have you encountered in your work? I'd love to discuss potential solutions and hear about your experiences in the comments.*